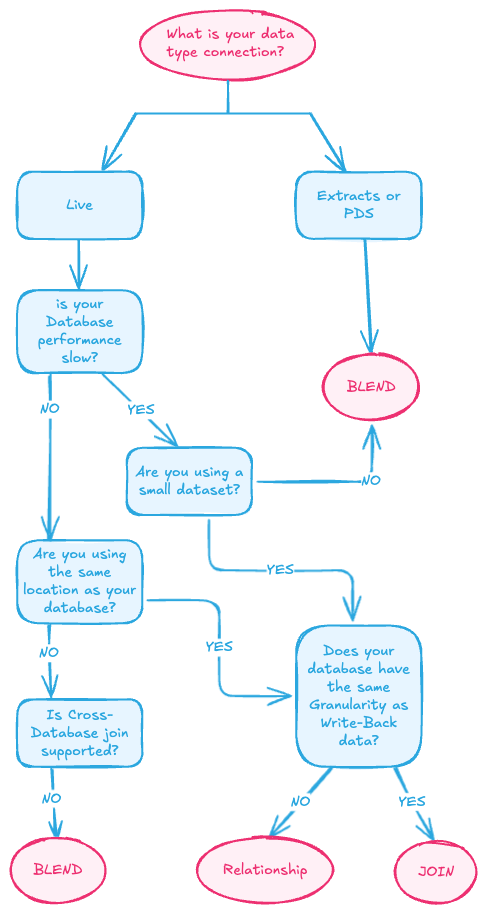

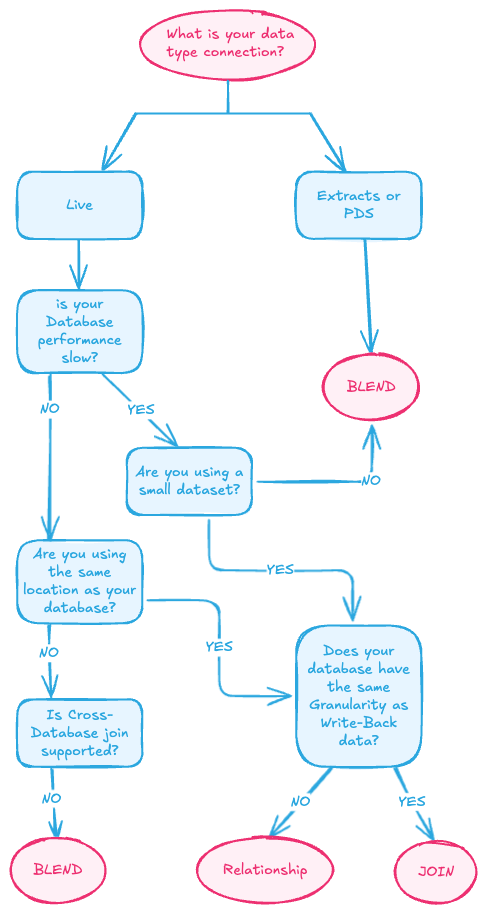

How to Choose the Best Data Connection Approach with WriteBackExtreme

When using WriteBackExtreme (WBE) alongside other data sources, there are several key factors to consider before adding the WBE data set. In this blog post, we’ll define each factor and then walk through a list of possible scenarios to help you find the best connection approach for your specific needs.

1. Live vs Extract data connection

The “Connection Type” refers to whether your primary data source is “Live” (a direct connection to the database) or “Extract” (an offline copy of the data).

• Extracts are an excellent solution for highly-active transactional systems that cannot afford the resources for frequent queries. The extract can be refreshed nightly and is available to users during the day. Extracts can also be subsets of data based on a fixed number of records, a percentage of total records, or filter criteria. The data engine can even do incremental extracts that update existing extracts with new data.

• Live connections are generally slower, especially for large datasets.

• When working with large or complex datasets in Tableau, you can optimize performance by strategically combining data extracts and live connections. Tableau allows you to use different connection types within the same workbook, which can help balance real-time data needs with query speed.

For instance, you might create an extract for large, historical datasets that don’t change frequently, while maintaining a live connection for tables that require up-to-the-minute updates. This approach provides several performance benefits.

2. The performance of your primary database connection

The “Performance” of your primary database connection refers to how quickly it can respond to queries and return data. The “performance” of your primary database connection depends on several factors, including the type of database and the operations being performed. Databases, such as relational databases, are optimized for lightning-fast CRUD operations (Create, Read, Update, and Delete), which make them highly efficient for day-to-day transactional tasks. On the other hand, data warehouses are designed to handle a smaller number of more complex queries over large datasets, making them ideal for analytical tasks.

For example, when displaying data in a Tableau dashboard, operations often involve “SELECT” queries, which are read operations. These are generally faster in data warehouses, as they are optimized for retrieving large volumes of data across multiple tables. However, the speed of your connection also depends on the database’s architecture and how efficiently it processes queries.

If your primary database connection is slow, especially with large data sizes, it can take longer to refresh the data, impacting user experience. To improve performance, consider the type of action you are performing and whether your data source is optimized for it.

3. Small or big sized data sets

The “Data Size” refers to the volume of data that needs to be accessed. For instance data sets below 100k records are small.

4. Connecting to the Same Database vs Different Databases?

This factor determines whether the tables are located in the “Same Database” or “Different Databases.” Using the same database can improve performance, while different databases may require additional processing.

5. Do the granularities match between your data source and WriteBackExtreme data?

The “Granularity of Write-back data” refers to the level of detail maintained when writing data back to the database. “Same Granularity” means the write-back retains the original data structure, while “Different Granularity” indicates the data is modified or aggregated during the write-back process. For instance, if you originally had sales data tracking individual transactions with timestamps, columns for product, price, and customer ID, a same-granularity write-back would preserve all those precise details. In contrast, with Different Granularity”, those individual transactions might get aggregated into daily or monthly sales summaries.

Now that we have the knowledge about different factors let’s go through the possible scenarios and the recommended connection approach

o Youre using an extract.

Regardless of database performance, data size, or granularity, the recommended approach is to use a Blend when working with an extract. (WriteBackExtreme is capable to only refresh a selected datasource, in this case the WriteBackExtreme blended datasource.)

o Published data sources (PDS)

If you’re using a PDS on a cloud or server, the recommended approach is to use a Blend. (WriteBackExtreme is capable to only refresh a selected datasource, in this case the WriteBackExtreme blended datasource.)

o Live connection with slow performance and large data size

In this case, a Blend is required to manage the performance for large, slow databases. (WriteBackExtreme is capable to only refresh a selected datasource, in this case the WriteBackExtreme blended datasource.)

o Live connection with slow performance and small data size

- Same granularity: You can use a Join when performance is sufficient, or a Blend to reduce the query load on the slow database.

- Different granularity: Use a Relationship to manage the mismatched granularity effectively, or a Blend to reduce the query load on the slow database.

o Live connection with fast performance, and same database locations (for fast performance data sources the data size is not important)

- Same granularity: Use a Join.

- Different granularity: Use a Relationship to manage the mismatched granularity effectively.

o Live connection with fast performance, and different database locations (for fast performance data sources the data size is not important)

1. If cross-database joins are not supported, use a Blend regardless of granularity. (WriteBackExtreme is capable to only refresh a selected datasource, in this case the WriteBackExtreme blended datasource.)

2. If Cross-DataBase join is supported, then for:

-

Same granularity: Use a join

-

Different granularity: Use a Relationship to manage the mismatched granularity effectively.

Ready to Transform Your data analytics? Download your extension for free and start now!